Python is one of the most popular programming languages for data mining and web scraping. The community offers a huge number of libraries and niche scrapers, but we’d like to share the five most popular ones.


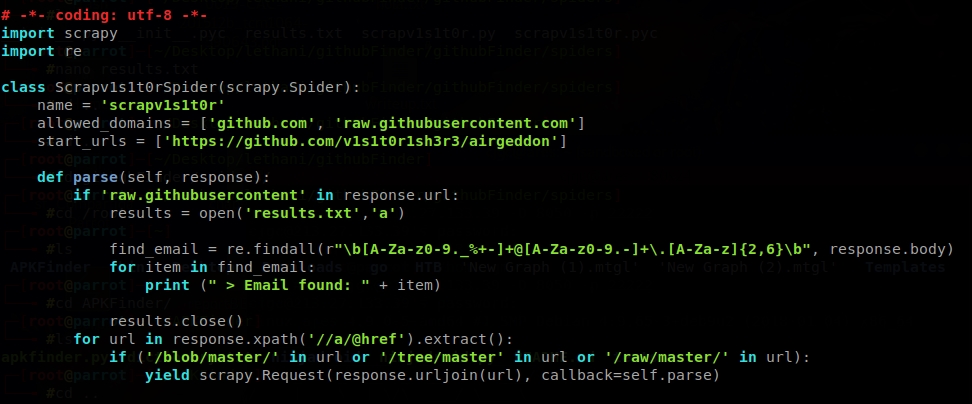


Most of the advantages of these libraries are also available through our API, and some of the libraries can be used together with it.

The Top 5 Python Web Scraping Libraries in 2020

1. Requests

Requests is well known to most Python developers as the fundamental tool for getting raw HTML data from web resources.

To install the library, run pip install requests in your command prompt or terminal.

After this, you can check the installation from the Python REPL:
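One way to run that check is sketched below. Rather than a bare import, it uses the standard library's importlib.metadata so the same helper works for any of the packages in this article. The helper name installed_version is our own and is not part of Requests.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        # The package is not installed in the current environment.
        return None


# In the REPL you would call, for example: installed_version("requests")
```

A version string means the package is installed; None means the install step did not succeed in the interpreter you are running.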

- Official docs URL: https://requests.readthedocs.io/en/latest/

- GitHub repository: https://github.com/psf/requests
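As a minimal sketch of what getting raw HTML with Requests can look like, the function below downloads a page and returns its source. The function name fetch_html and the 10 second timeout are assumptions made for illustration.

```python
import requests


def fetch_html(url, timeout=10.0):
    """Download a page and return its HTML as text."""
    response = requests.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx answers instead of returning an error page.
    response.raise_for_status()
    return response.text
```

Calling fetch_html("https://example.com") would return the page source as a string, ready to be handed to one of the parsers described below.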

2. LXML

When HTML parsing speed matters, keep in mind the great library called LXML. It is a real champion at HTML and XML parsing for web scraping, so software based on LXML can be used to scrape frequently changing pages, such as gambling sites that provide odds for live events.

To install the library, run pip install lxml in your command prompt or terminal.

The LXML toolkit is a really powerful instrument, and its full functionality can’t be described in just a few words, so the following links may be useful:

- Official docs URL: https://lxml.de/index.html#documentation

- GitHub repository: https://github.com/lxml/lxml/
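To give a feel for LXML, here is a small sketch that parses an HTML string and pulls values out with XPath. The sample markup, the odds table and the function name extract_odds are all made up for illustration and do not come from any real site.

```python
from lxml import html

# Invented sample markup standing in for a downloaded page.
SAMPLE_PAGE = """
<html><body>
  <table id="odds">
    <tr><td class="team">Home</td><td class="price">1.85</td></tr>
    <tr><td class="team">Away</td><td class="price">2.10</td></tr>
  </table>
</body></html>
"""


def extract_odds(page_source):
    """Map each team label to its price using XPath queries."""
    tree = html.fromstring(page_source)
    odds = {}
    for row in tree.xpath('//table[@id="odds"]//tr'):
        # string(...) returns the text content of the first matching cell.
        team = row.xpath('string(td[@class="team"])').strip()
        price = row.xpath('string(td[@class="price"])').strip()
        odds[team] = float(price)
    return odds
```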

3. BeautifulSoup

Probably 80% of all the Python web scraping tutorials on the Internet use the BeautifulSoup4 library as a simple tool for dealing with retrieved HTML in the most human-friendly way. It covers selectors, attributes, DOM tree navigation, and much more. It is the perfect choice for porting code to or from JavaScript's Cheerio or jQuery.

To install this library, run pip install beautifulsoup4 in your command prompt or terminal.

As mentioned above, there are plenty of tutorials on the Internet about BeautifulSoup4 usage, so do not hesitate to Google it!

- Official docs URL: https://www.crummy.com/software/BeautifulSoup/bs4/doc/

- Launchpad repository: https://code.launchpad.net/~leonardr/beautifulsoup/bs4
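The selector style of BeautifulSoup4 can be sketched as follows. The sample markup and the function name extract_links are invented for illustration, and the built-in html.parser backend is used so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup

# Invented sample markup standing in for a downloaded page.
SAMPLE_PAGE = """
<html><body>
  <ul class="links">
    <li><a href="/docs">Docs</a></li>
    <li><a href="https://example.com/blog">Blog</a></li>
  </ul>
</body></html>
"""


def extract_links(page_source):
    """Return (text, href) pairs for every link inside the ul.links list."""
    soup = BeautifulSoup(page_source, "html.parser")
    # select() takes a CSS selector, much like jQuery or Cheerio.
    return [(a.get_text(strip=True), a["href"]) for a in soup.select("ul.links a[href]")]
```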

4. Selenium

Selenium is the most popular WebDriver tool, with bindings for most programming languages. Quality assurance engineers, automation specialists, developers, and data scientists have all used it at least once. For web scraping it’s like a Swiss Army knife: no additional libraries are needed because any action can be performed in a browser just as a real user would, including opening pages, clicking buttons, filling in forms, solving CAPTCHAs, and much more.

To install this library, run pip install selenium in your command prompt or terminal.

The code below shows how easily web crawling can be started with Selenium:


As this example illustrates only about 1% of Selenium’s power, here are some useful links:

- Official docs URL: https://selenium-python.readthedocs.io/

- GitHub repository: https://github.com/SeleniumHQ/selenium

5. Scrapy

Scrapy is the greatest web scraping framework, developed by a team with a lot of enterprise scraping experience. Software built on top of it can be a crawler, a scraper, a data extractor, or all of these together.

To install this library, run pip install scrapy in your command prompt or terminal.

We definitely suggest you start with a tutorial to know more about this piece of gold: https://docs.scrapy.org/en/latest/intro/tutorial.html

As usual, the useful links are below:

- Official docs URL: https://docs.scrapy.org/en/latest/index.html

- GitHub repository: https://github.com/scrapy/scrapy

Which web scraping library to use?

So, it’s all up to you and up to the task you’re trying to resolve, but always remember to read the Privacy Policy and Terms of the site you’re scraping 😉.


Introduction

Before reading it, please read the warnings in my blog Learning Python: Web Scraping.

Scrapy is an application framework for crawling web sites and extracting structured data, which can be used for a wide range of applications such as data mining, information processing, or historical archival. You can install Scrapy via pip.

Don’t use the python-scrapy package provided by Ubuntu: it is typically too old and slow to catch up with the latest Scrapy release. Instead, install it with pip install scrapy.

Basic Usage

After installation, run python3 -m scrapy --help to see the help information.

A basic flow of Scrapy usage:

- Create a new Scrapy project.

- Write a spider to crawl a site and extract data.

- Export the scraped data using the command line.

- Change the spider to recursively follow links.

- Try using other spider arguments.

Create a Project

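Create a new Scrapy project named soccer by running python3 -m scrapy startproject soccer.

This creates a soccer directory that contains scrapy.cfg and a soccer Python package with items.py, middlewares.py, pipelines.py, settings.py and a spiders directory.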

Create our own spider class, a subclass of scrapy.Spider, in the file soccer_spider.py under the soccer/spiders directory.

- name: identifies the Spider. It must be unique within a project.

- start_requests(): must return an iterable of Requests which the Spider will begin to crawl from.

- parse(): a method that will be called to handle the response downloaded for each of the requests made.

Scrapy schedules the scrapy.Request objects returned by the start_requests method of the Spider.

Running Spider

Go to the soccer root directory and run the spider using the crawl or runspider command, for example python3 -m scrapy crawl followed by the spider's name, or python3 -m scrapy runspider soccer/spiders/soccer_spider.py.

Once you have the page content in response.body or in a locally saved file, you could use other libraries such as Beautiful Soup to parse it. Here, I will continue to use the methods provided by Scrapy to parse the content.

Extracting Data

Scrapy provides CSS selectors .css() and XPath .xpath() for the response object. Some examples:

With these, you can extract data by element, CSS selector or XPath. Add the code to the parse() method.

Sometimes you may want to extract data from another page linked from the current one. You can then find the link and send another request for it, passing the method that should handle the new response as the callback.

Use the .urljoin() method to build a full absolute URL (since sometimes the links can be relative).

Scrapy also provides another method .follow() that supports relative URLs directly.

Example

I will still use the data in UEFA European Cup Matches 2017/2018 as an example.

The HTML content in the page looks like:

I developed a new class that extends the scrapy.Spider class and then ran it via Scrapy to extract the data.

I prefer using XPath because it is more flexible. Learn more about XPath in the W3Schools XML and XPath tutorial or in other tutorials.

Further

You can use other shell commands, such as python3 -m scrapy shell 'URL', to do some testing before writing your own spider.

More detailed information about Scrapy can be found in the Scrapy official documentation or on its GitHub page.
